For the past few years, IoT has been a hot topic in the technology world. This has helped edge computing gain momentum and has given rise to new technologies. In mid-2017 Google announced TensorFlow Lite, a more advanced and lightweight successor to TensorFlow Mobile (Google plans to eventually replace TensorFlow Mobile with TensorFlow Lite).

What is edge computing?

Edge computing is a method of optimizing cloud computing systems “by taking the control of computing applications, data, and services away from some central nodes (the “core”) to the other logical extreme (the “edge”) of the Internet”, which makes contact with the physical world. Performing analytics and knowledge generation close to where data enters from the physical world (via sensors) and where actions change physical state (via outputs and actuators) reduces the communication bandwidth needed between the systems under control and the central data centre. (Source: Wikipedia)
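
To make the bandwidth argument concrete, here is a minimal sketch in plain Python, not a real edge framework. A hypothetical edge node receives a batch of sensor readings, makes a local decision (switching an imaginary actuator) and prepares only a compact summary for upstream transmission instead of every raw reading. The function names `summarize` and `edge_step`, the threshold, the JSON payload and the synthetic readings are all illustrative assumptions.

```python
import json
import statistics


def summarize(readings):
    """Reduce a batch of raw sensor readings to a few statistics."""
    return {
        "count": len(readings),
        "mean": round(statistics.mean(readings), 2),
        "max": max(readings),
    }


def edge_step(readings, threshold=30.0):
    """Hypothetical edge node: act locally, send only a summary upstream."""
    # Local decision: drive an (imaginary) actuator without a cloud round trip.
    actuator_on = max(readings) > threshold
    # Only this compact payload would travel to the central data centre.
    payload = json.dumps(summarize(readings))
    return actuator_on, payload


# Synthetic data: one minute of temperature samples at 10 Hz.
raw = [20.0 + (i % 50) * 0.25 for i in range(600)]
actuator_on, payload = edge_step(raw)
raw_bytes = len(json.dumps(raw))
print(f"actuator on: {actuator_on}")
print(f"raw upload: {raw_bytes} bytes, summary upload: {len(payload)} bytes")
```

The design choice being illustrated is simply where the computation happens: the decision and the data reduction run next to the sensor, so only a few dozen bytes, rather than the full stream, need to cross the network.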

Why edge computing?

- Most present-day mobile and embedded devices ship with high-performance hardware acceleration, which makes powerful on-device computation possible.

- In the era of IoT, implementing intelligence on-device reduces the reliance on cloud-based intelligence.

- User privacy is better protected because user data does not have to leave the device.

- More importantly, applications can serve end users in “offline” mode, without an internet connection.

- Edge computing (and the closely related fog computing) is poised to be the next big thing because it processes data at the edge of the network, close to the source of the data. This reduces network bandwidth and keeps data and computation away from a centralized location.

Edge meets Machine Learning

Machine learning has a vast range of applications and is used across many industries, for example in image recognition, speech recognition, classification and medical diagnosis. As mobile devices advance, these machine learning applications can now run on them.

Example: an IoT device (a surveillance drone) equipped with a machine learning application that predicts potential crime in real time by monitoring human behaviour.

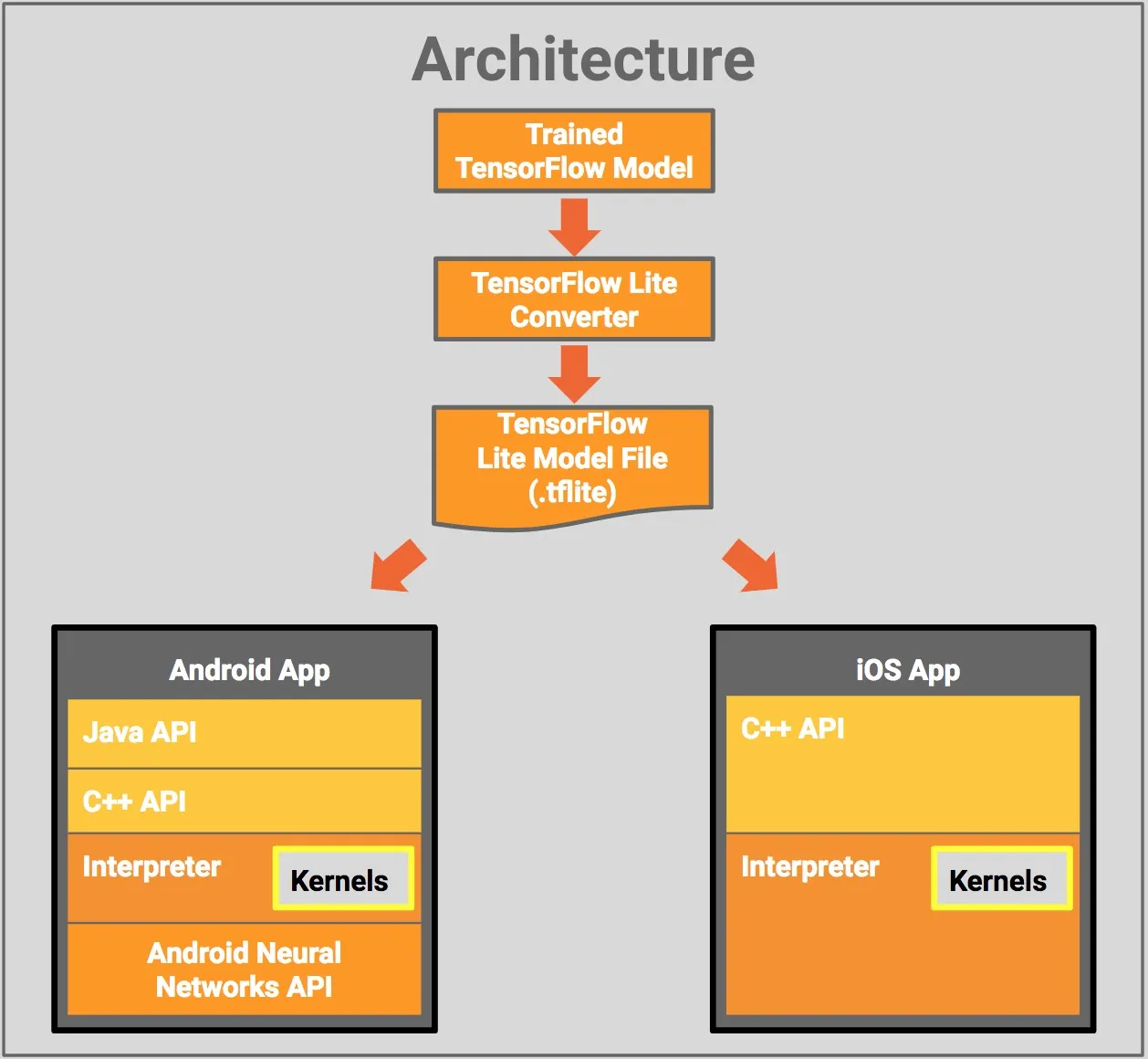

Understanding TFLite

TFLite supports a set of core operations, in both floating-point (float32) and quantized (uint8) form, that have been tuned for mobile platforms. Because TFLite supports fewer operations than TensorFlow, not every model can be converted to TFLite. The TensorFlow team plans to expand the set of operations supported by TFLite in the future. For now, the best approach is to consider carefully how operations will be converted and optimized when building a TensorFlow model intended for TFLite.
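
The float32/uint8 distinction can be illustrated with affine (asymmetric) quantization, where a real value r is approximated as r ≈ scale × (q − zero_point) with q an 8-bit integer. This is, roughly, the mapping used by TFLite's uint8 quantized models, but the code below is only a plain-Python sketch for intuition, not TFLite's converter. The function names and the simple min/max calibration are assumptions.

```python
def quant_params(rmin, rmax, qmin=0, qmax=255):
    """Choose scale and zero_point so that [rmin, rmax] maps onto [qmin, qmax]."""
    # Widen the range to include 0.0 so that zero is exactly representable.
    rmin, rmax = min(rmin, 0.0), max(rmax, 0.0)
    scale = (rmax - rmin) / (qmax - qmin)
    if scale == 0.0:
        scale = 1.0  # degenerate all-zero range; any positive scale works
    zero_point = int(round(qmin - rmin / scale))
    zero_point = max(qmin, min(qmax, zero_point))
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=0, qmax=255):
    """float32 -> uint8: q = clamp(round(r / scale) + zero_point)."""
    return [max(qmin, min(qmax, int(round(v / scale)) + zero_point)) for v in values]


def dequantize(qvalues, scale, zero_point):
    """uint8 -> float: r = scale * (q - zero_point)."""
    return [scale * (q - zero_point) for q in qvalues]


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
scale, zp = quant_params(min(weights), max(weights))
q = quantize(weights, scale, zp)
restored = dequantize(q, scale, zp)
print("scale:", scale, "zero_point:", zp)
print("quantized:", q)
print("restored:", restored)
```

Each weight now occupies one byte instead of four, at the cost of a rounding error of about half a quantization step; zero maps back to exactly 0.0, which matters for operations such as zero padding.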

- TFLite defines a new model file format based on FlatBuffers (an efficient cross-platform serialization library), whereas TensorFlow uses Protocol Buffers.

- On devices that support it, TFLite provides an interface for hardware acceleration through the Android Neural Networks API (available from Android 8.1 / O-MR1, API level 27).

- TensorFlow is now optimized for the Qualcomm Hexagon 682 DSP.

- TFLite has a new mobile-optimized interpreter that keeps apps lean and fast. The interpreter uses static graph ordering and a custom memory allocator. With a static graph, you define the complete graph first and then inject data to run it, whereas with a dynamic graph the structure is defined on the fly. A static graph enables many convenient operations, such as storing a fixed graph data structure, shipping models independently of code and performing graph transformations. A static graph is also the better choice when the computation can be distributed over multiple machines.
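
The static-graph idea can be illustrated with a toy sketch in plain Python (3.9+, for `graphlib`); it is not TFLite's interpreter. The whole graph is declared first, its execution order is computed once with a topological sort and frozen, and data is then injected and run as many times as needed. Because the order is known up front, a real interpreter can also plan its buffers ahead of time, which is the kind of optimization a custom allocator can exploit. The `StaticGraph` class and its methods are hypothetical.

```python
from graphlib import TopologicalSorter


class StaticGraph:
    """Toy static graph: build it fully, freeze the execution order, then run."""

    def __init__(self):
        self.ops = {}      # node name -> (function, list of input names)
        self.order = None  # set by freeze()

    def add(self, name, fn, inputs):
        self.ops[name] = (fn, inputs)

    def freeze(self):
        # Compute the execution order once; it never changes afterwards.
        deps = {name: set(inputs) for name, (_, inputs) in self.ops.items()}
        # Placeholders (inputs that are not ops) are dropped from the order.
        self.order = [n for n in TopologicalSorter(deps).static_order() if n in self.ops]

    def run(self, feeds):
        if self.order is None:
            raise RuntimeError("call freeze() before run()")
        # Inject data into the placeholders and walk the precomputed order.
        values = dict(feeds)
        for name in self.order:
            fn, inputs = self.ops[name]
            values[name] = fn(*(values[i] for i in inputs))
        return values


g = StaticGraph()
g.add("mul", lambda x, w: x * w, ["x", "w"])
g.add("add", lambda m, b: m + b, ["mul", "b"])
g.add("relu", lambda a: max(a, 0.0), ["add"])
g.freeze()
print(g.order)                                          # ['mul', 'add', 'relu']
print(g.run({"x": 2.0, "w": 3.0, "b": -1.0})["relu"])   # 5.0
print(g.run({"x": 2.0, "w": -3.0, "b": 1.0})["relu"])   # 0.0
```

In a dynamic-graph framework, by contrast, the structure would be rebuilt by ordinary code on every call, so there is no fixed order to optimize ahead of time.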

Next: Training a custom model and converting it to TFLite

Sources

Wikipedia: https://en.wikipedia.org

TensorFlow: https://www.tensorflow.org/mobile/tflite

IEEE Xplore: https://ieeexplore.ieee.org